Beyond Goroutines: Exploring Advanced Concurrency Patterns in Go - Part 2

On Go's singleflight and errgroup packages, and other useful design patterns for concurrency

Last week we delved into the basics of Go’s concurrency: how goroutines are scheduled and the ways they can be synchronised. This week we’ll take a closer look at some advanced patterns, how they work and when it’s best to use them.

Package Singleflight

The singleflight package provides a duplicate function call suppression mechanism.

Its Do method executes and returns the results of the given function, making sure that only one execution is in-flight for a given key at a time. If a duplicate comes in, the duplicate caller waits for the original to complete and receives the same results.

It is especially useful in scenarios where multiple goroutines may request the same data simultaneously: it makes sure that a specific piece of work is done only once while those requests overlap, which makes it well suited for optimising your code by removing redundant processes that generate the same result.

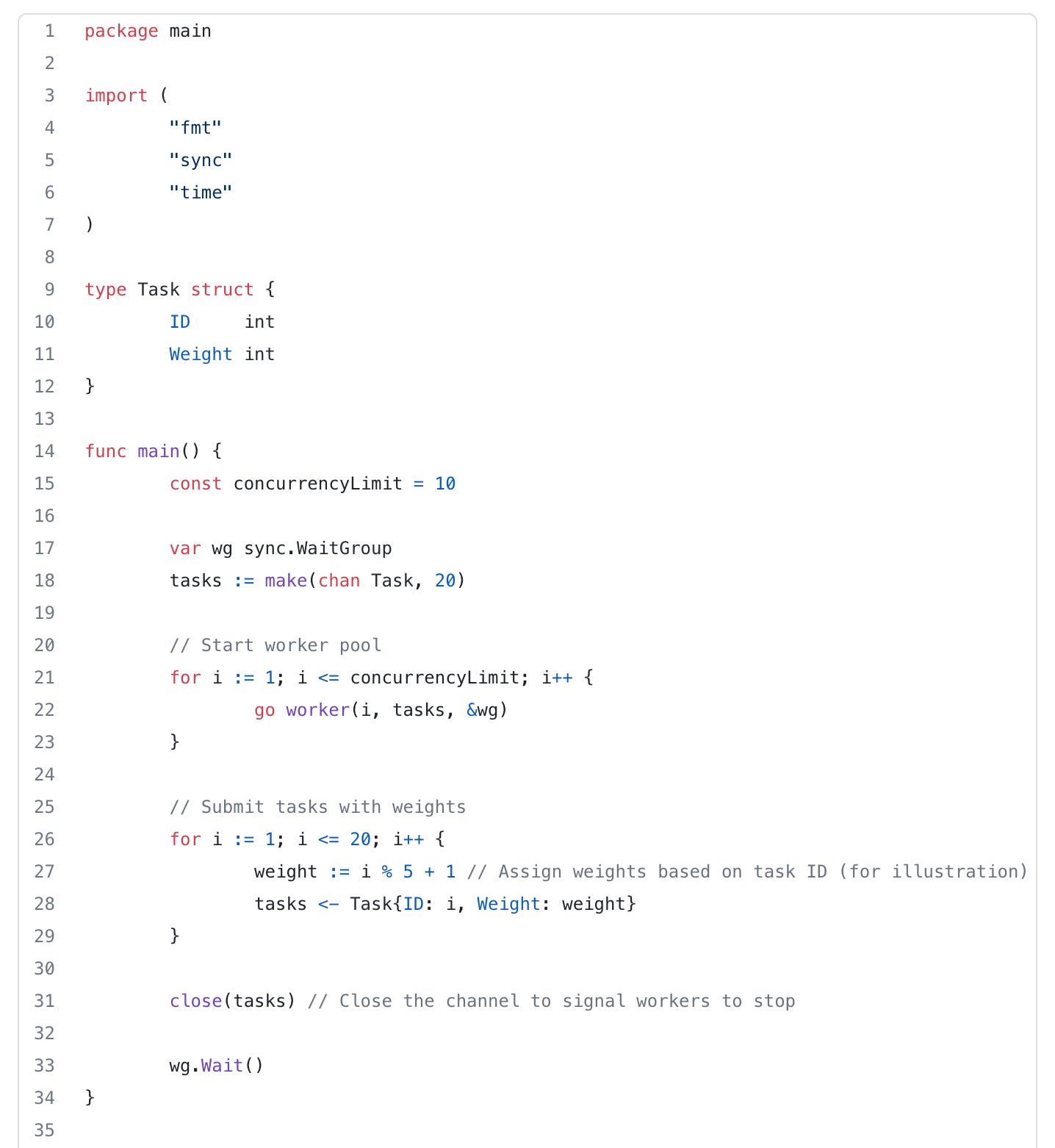

Consider an example in which several goroutines request the same data at the same time.

The fetchData function simulates fetching data. The singleflight.Group is used to manage concurrent requests for the same key. Each goroutine calls sf.Do with a key that identifies the data it wants. The function passed to sf.Do is executed only once for a given key while a call is in flight, and other goroutines that arrive with the same key wait for that call to complete and share its result. The sync.WaitGroup is used to wait for all goroutines to finish.

Use Cases:

Cache Loading - to avoid redundant fetching or computation, making sure the operation is only done once.

Resource Intensive Operations - for fetching data or performing an operation that is expensive and should be done only once even if requested by multiple goroutines simultaneously.

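Load Reduction - for collapsing a burst of identical concurrent calls into one, so that a backend sees fewer requests. Note that singleflight only deduplicates calls that overlap in time; it does not limit how often the function runs, so it is not a substitute for a real rate limiter.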

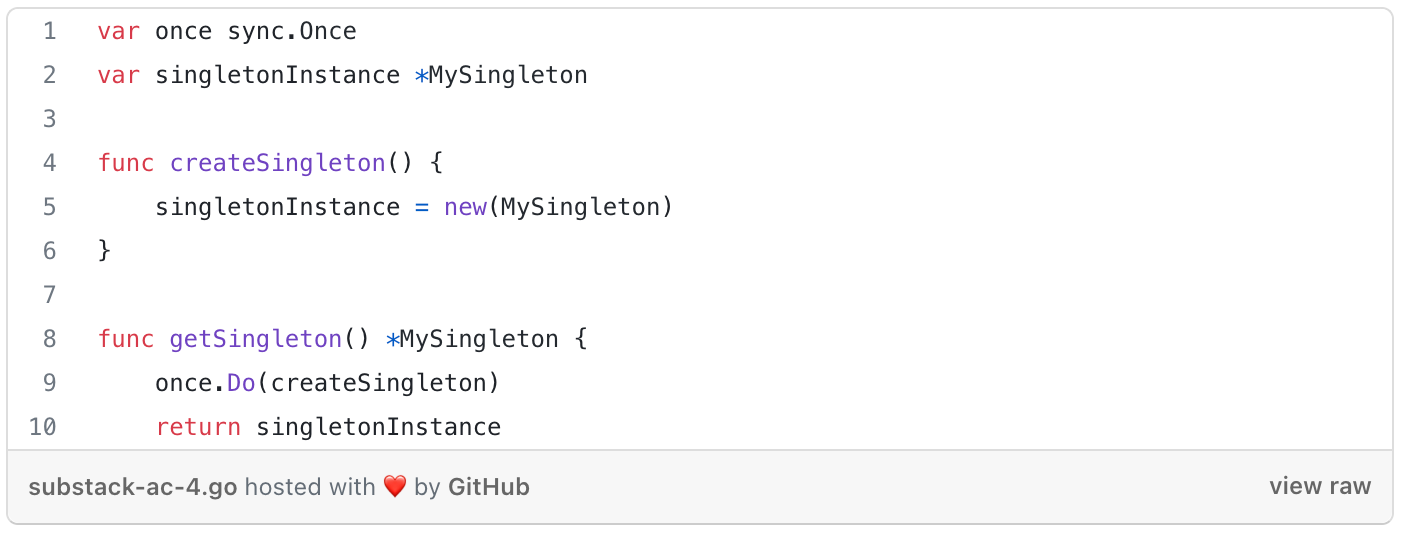

Sync’s Once Type

sync.Once provides a mechanism to ensure that a particular function is executed only once, regardless of the number of goroutines calling it.

It is useful for lazy initialisation, one-time setup, or any scenario where a specific action needs to be performed exactly once in a concurrent program.

Use Cases:

Lazy Initialisation - sync.Once can be used for lazy initialisation of resources that are expensive to create or set up. The initialisation function will be executed only when the resource is first accessed.

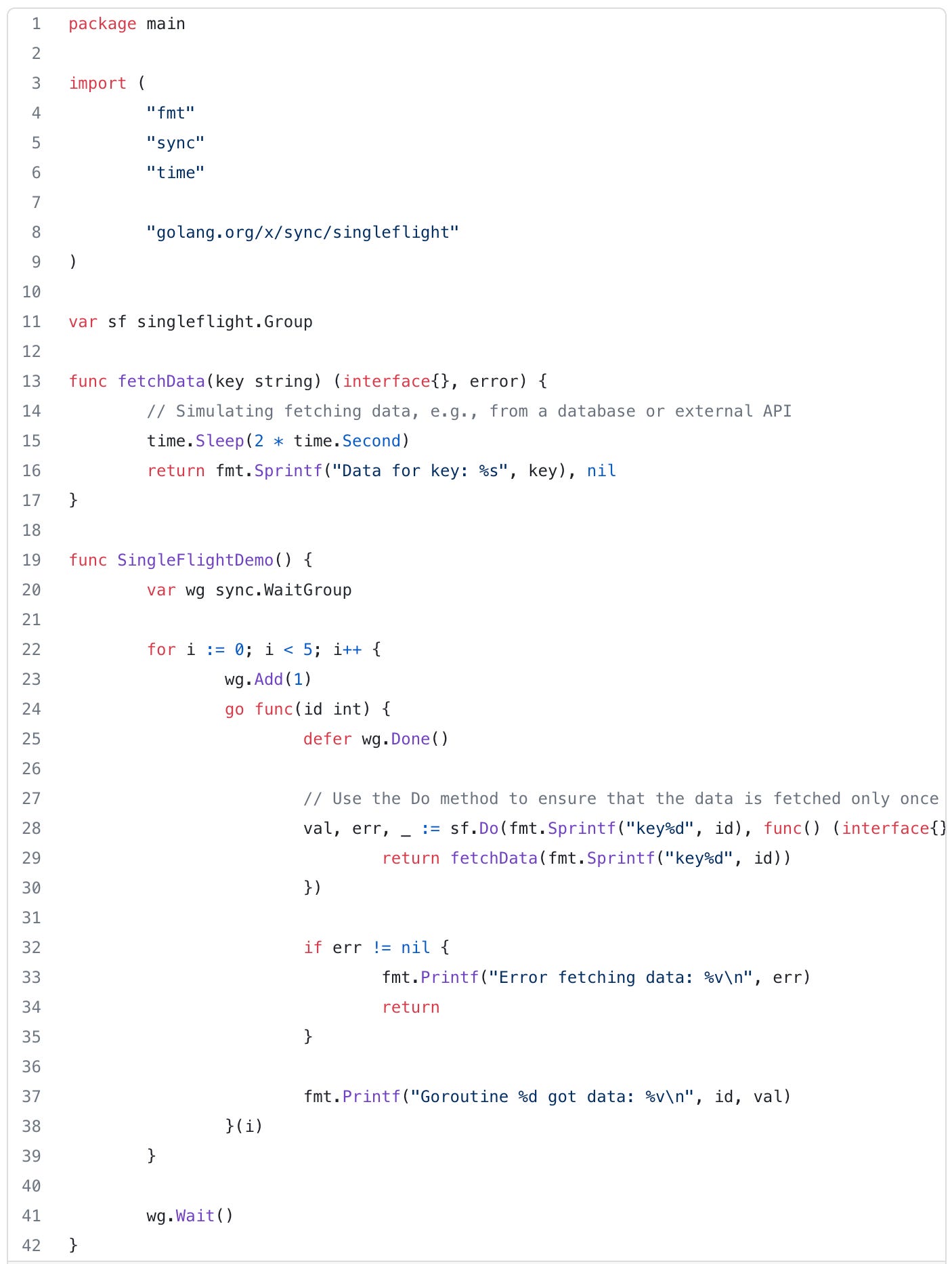

Global Initialisation - When you have global variables or settings that need to be initialised before they are first used, sync.Once can ensure that the initialisation function is executed only once.

Singleton Pattern - sync.Once can be used to implement a simple form of the singleton pattern, ensuring that an instance of an object is created only once.

Resource Cleanup - sync.Once can be used to perform resource cleanup or teardown operations exactly once, ensuring that cleanup is not duplicated.
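The lazy initialisation and singleton use cases can be sketched together as follows. This is a minimal illustration, not code from the original post: the Config type, the GetConfig accessor, the initCount counter and the DSN value are all hypothetical.

```go
package main

import (
	"fmt"
	"sync"
)

// Config is a hypothetical resource that is expensive to build.
type Config struct {
	DSN string
}

var (
	cfg       *Config
	cfgOnce   sync.Once
	initCount int // written only inside cfgOnce.Do, so it needs no extra lock
)

// GetConfig lazily builds the shared Config the first time it is needed.
func GetConfig() *Config {
	cfgOnce.Do(func() {
		initCount++
		cfg = &Config{DSN: "postgres://localhost/app"} // placeholder value
	})
	return cfg
}

func main() {
	var wg sync.WaitGroup
	for i := 0; i < 10; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			_ = GetConfig()
		}()
	}
	wg.Wait()
	// prints "initialised 1 time(s)"
	fmt.Println("initialised", initCount, "time(s)")
}
```

Every caller blocks until the first initialisation has finished, so none of them can observe a half-built Config. On Go 1.21 and later, sync.OnceValue offers a more compact way to express the same idea.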

Singleflight and sync.Once might look like they both achieve the same purpose but that’s not the case.

singleflight: You want to collapse concurrent calls for the same key into a single execution to avoid redundant work. Once that call has returned, the next call with the same key executes the function again.

You are dealing with scenarios where multiple goroutines may request the same data or perform similar work concurrently.

sync.Once: You want to ensure that a particular function is executed exactly once for the lifetime of the Once value, regardless of the number of goroutines calling it.

You are dealing with scenarios like lazy initialisation, global setup, or singleton pattern implementation.

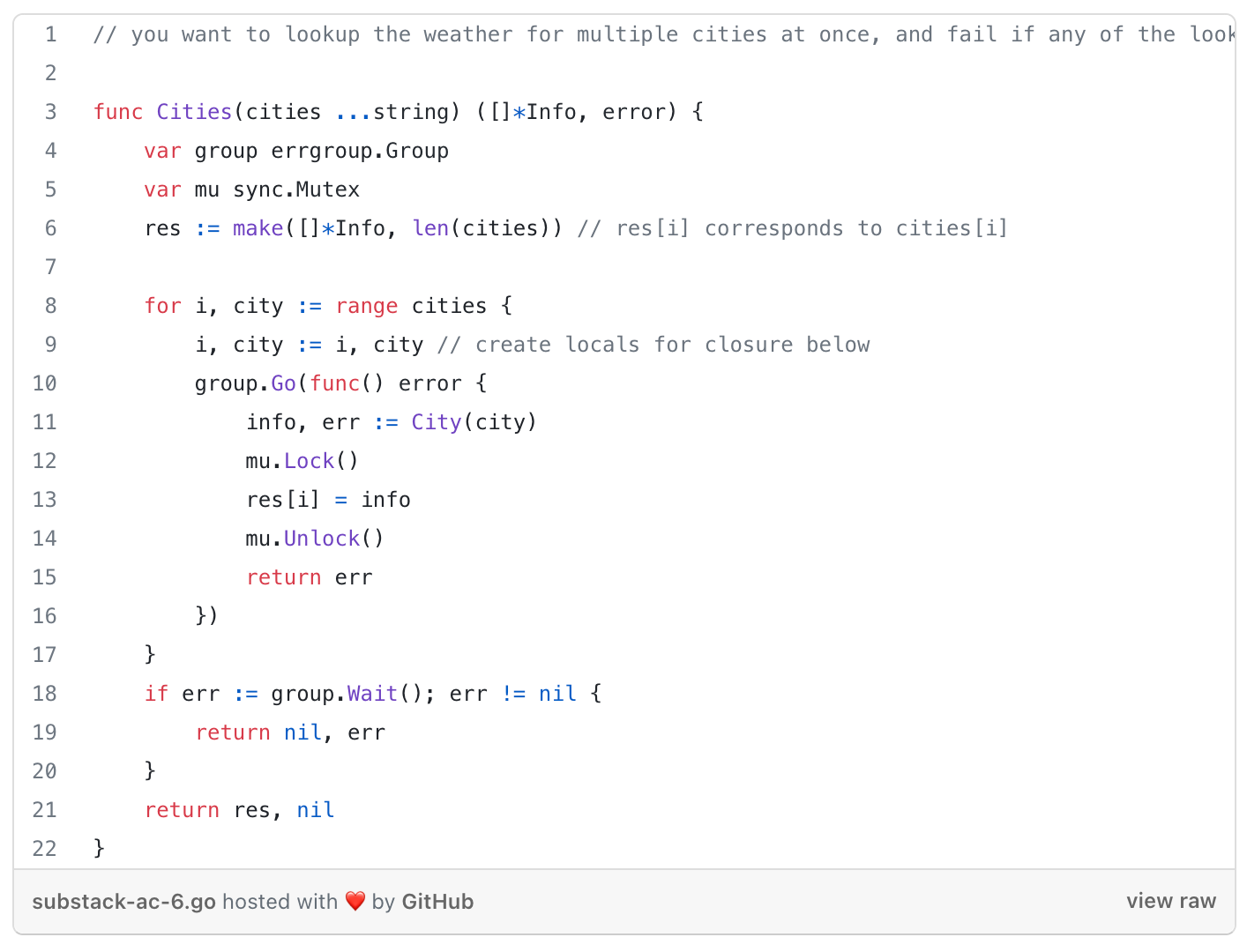

Errgroup

errgroup is similar to sync.WaitGroup, but the tasks return errors that are propagated back to the waiter. It is useful when you have multiple operations that you want to wait for, but you also want to determine if they all completed successfully.

errgroup is designed to manage a group of goroutines. You start goroutines through the group’s Go method, and the group keeps track of their execution. When the group is created with errgroup.WithContext, the first goroutine to return a non-nil error cancels the group’s context, which signals all other goroutines to stop; they have to watch that context to actually exit early. This helps to prevent goroutines from continuing to run when an error has occurred. The Wait method of errgroup.Group blocks until all goroutines in the group have completed their execution and returns the first non-nil error, if any. This allows you to handle errors centrally.

Use cases:

Fan-Out Concurrency - When you need to perform multiple independent tasks concurrently (fan-out), and you want to wait for all of them to complete while cancelling the remaining work on the first error.

Error Handling - When you want to handle errors collectively instead of checking errors from each goroutine individually.

Context Cancellation - errgroup integrates well with the Go context package, allowing you to use context cancellation to stop the entire group if needed.

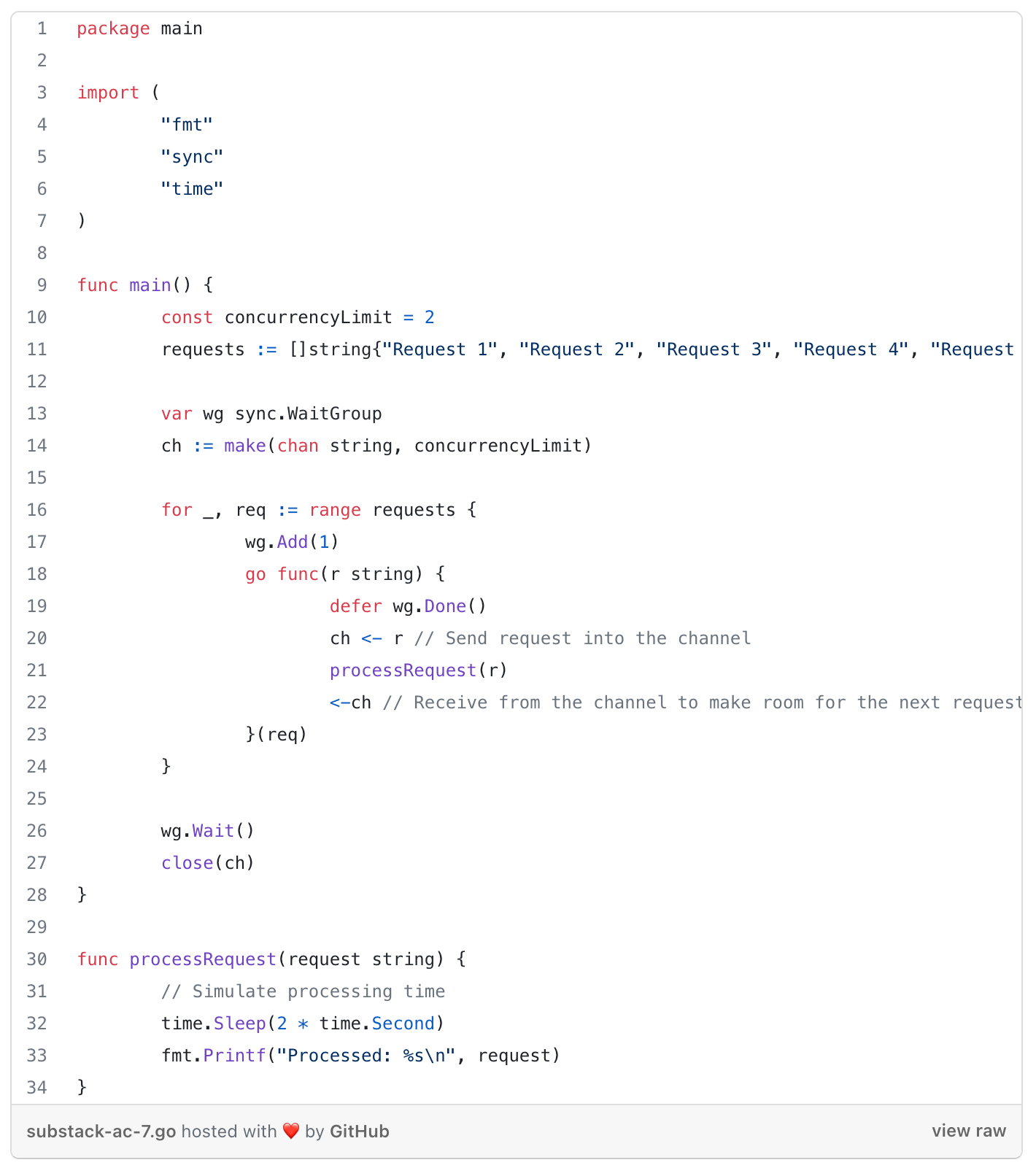

Bounded concurrency via buffered channels

This is a pattern where you use channels with a specified capacity (buffered channels) to control the level of concurrency.

It can be used to limit the number of goroutines actively working on a task, preventing unbounded resource consumption.

It is useful in situations where you want to limit the number of simultaneous network requests, file I/O operations, or database queries to avoid overwhelming external resources.

Use cases:

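Rate Limiting - When you want to limit how many operations, such as API requests, file reads/writes, or database queries, are in flight at once. Note that this caps concurrency rather than operations per second; a time-based limit needs something like a ticker or a token bucket in addition.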

Resource Constraints - When you need to prevent unbounded resource consumption by limiting the number of concurrent operations.

Let’s say we want to cap the load we put on an API by allowing only two requests to be processed concurrently. In the following example, the processRequest function simulates the processing of each request and we limit the concurrency to 2 using a buffered channel (ch) with a capacity of 2: a value is sent into ch before a request starts and received from it when the request is done, so a third request blocks until a slot is free.
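In code, that idea could be sketched roughly as below. The processRequest and ch names follow the description above; the runBounded helper and the peak counter are assumptions added so that the effect of the limit can be observed.

```go
package main

import (
	"fmt"
	"sync"
	"time"
)

// processRequest simulates handling one API request.
func processRequest(id int) {
	time.Sleep(50 * time.Millisecond)
}

// runBounded processes n requests with at most limit of them running at once
// and returns the highest number of requests observed in flight.
func runBounded(n, limit int) int {
	ch := make(chan struct{}, limit) // buffered channel used as a semaphore
	var (
		wg       sync.WaitGroup
		mu       sync.Mutex
		inFlight int
		peak     int
	)

	for id := 1; id <= n; id++ {
		wg.Add(1)
		ch <- struct{}{} // blocks while limit requests are already running
		go func(id int) {
			defer wg.Done()

			mu.Lock()
			inFlight++
			if inFlight > peak {
				peak = inFlight
			}
			mu.Unlock()

			processRequest(id)

			mu.Lock()
			inFlight--
			mu.Unlock()
			<-ch // release the slot for the next request
		}(id)
	}
	wg.Wait()
	return peak
}

func main() {
	// The reported peak never exceeds the limit of 2.
	fmt.Println("peak concurrency:", runBounded(6, 2))
}
```

Acquiring the slot before starting the goroutine, as done here, also bounds the number of goroutines that exist at any moment. Acquiring it inside the goroutine would bound only the work, not the goroutine count.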

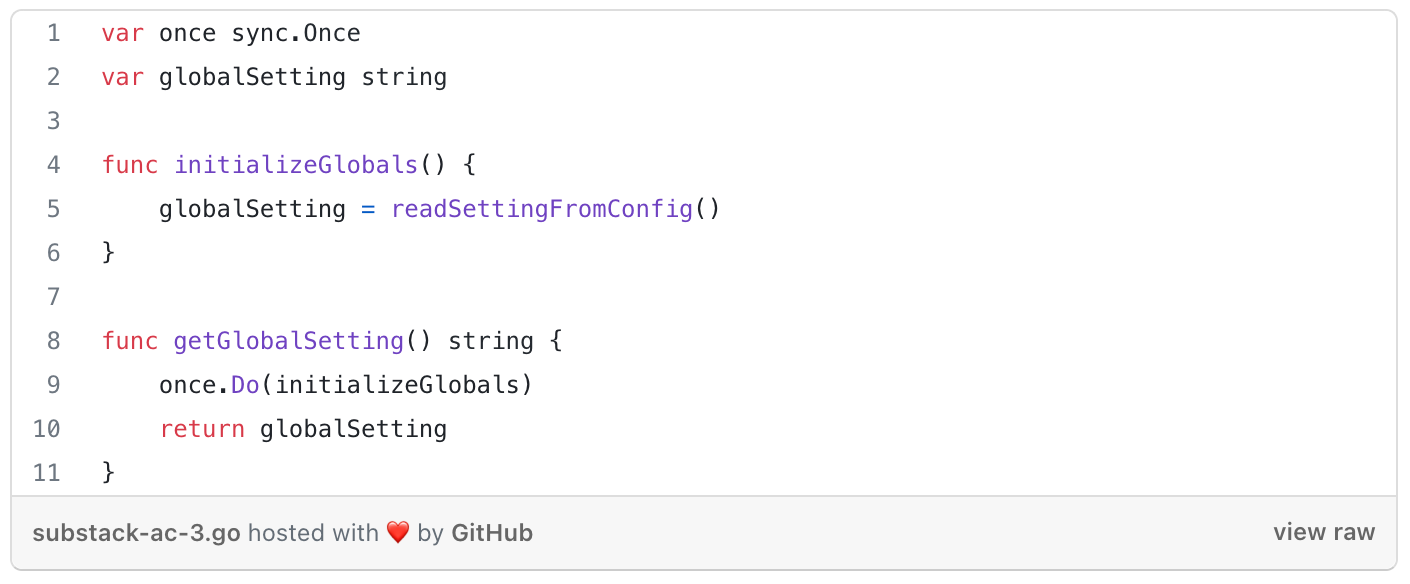

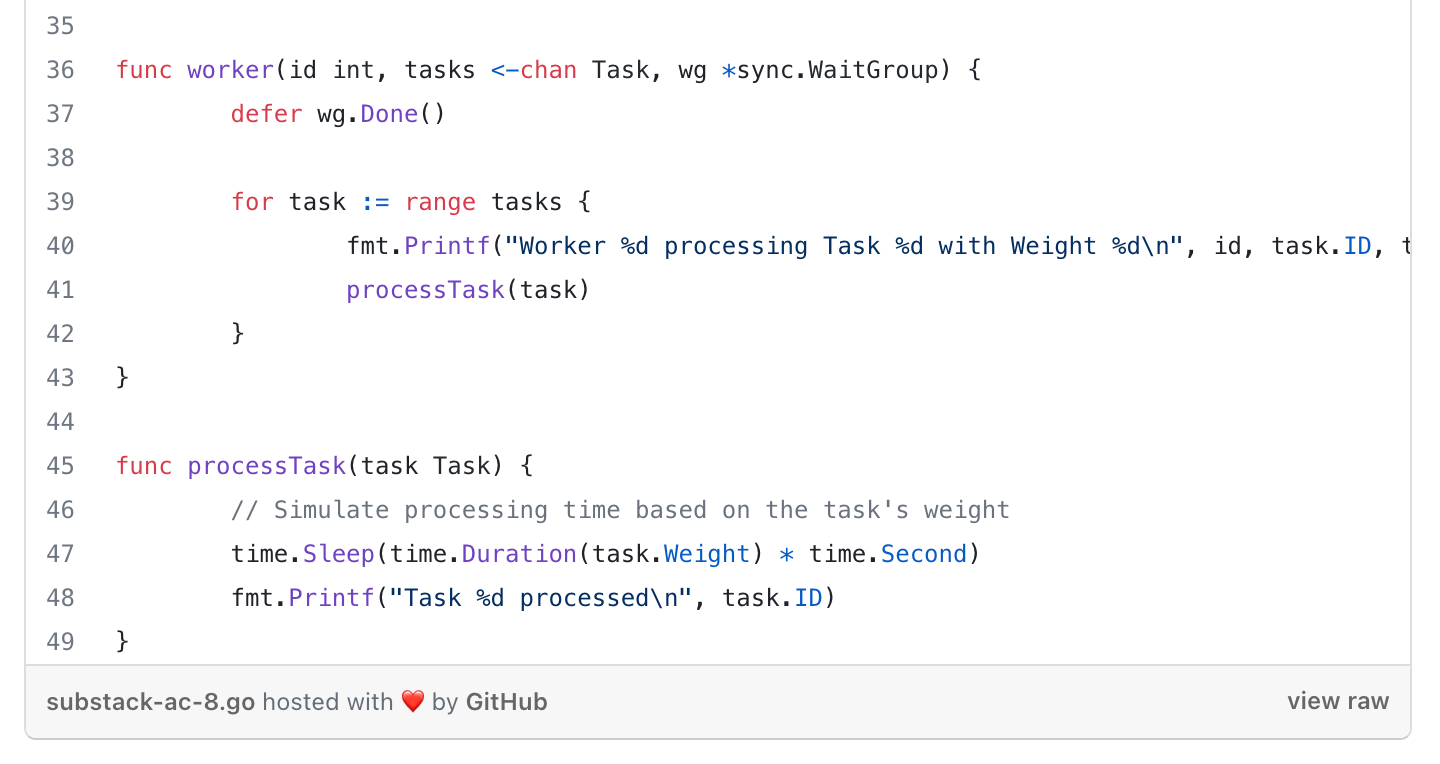

Weighted bounded concurrency

In some cases, not all tasks are equally expensive. Instead of reasoning about the number of tasks we want to run concurrently, we assign a "cost" to every task and acquire and release that cost from a semaphore.

This is a mechanism where tasks are assigned different weights, and the system ensures that the sum of weights of concurrently executing tasks does not exceed a certain limit.

It allows for fine-grained control over the level of concurrency based on the computational or resource intensity of each task.

Use cases:

Resource Management - When tasks have varying resource requirements, and you want to ensure that resource-intensive tasks do not overwhelm the system.

Fine-Grained Control - When you need fine-grained control over the level of concurrency based on the specific characteristics of each task.

Adaptive Scaling - When the system needs to adaptively adjust the concurrency limit based on factors such as system load, resource availability, or external conditions.

Consider an example in which tasks are assigned weights based on their IDs (for illustration purposes). Worker goroutines read tasks from a tasks channel and process them, taking their weights into account. A concurrencyLimit ensures that the sum of the weights of concurrently executing tasks does not exceed the specified limit.

These concurrency mechanisms help you build efficient and responsive systems. Whether you are handling complex distributed scenarios or managing the parallel execution of tasks, Go’s concurrency features contribute to the development of robust and scalable applications.