Crafting Conversations: Techniques and Tips for Effective Prompting

On types of generative AI, prompt engineering, best practices and techniques for prompting

Generative AI is a type of artificial intelligence capable of generating new content, including text, images, audio, video, and synthetic data. Whereas other types of AI use existing data mainly to classify or predict, generative AI learns the patterns and relationships in its training data and uses them to produce new data.

Generative AI models can be grouped into the following categories:

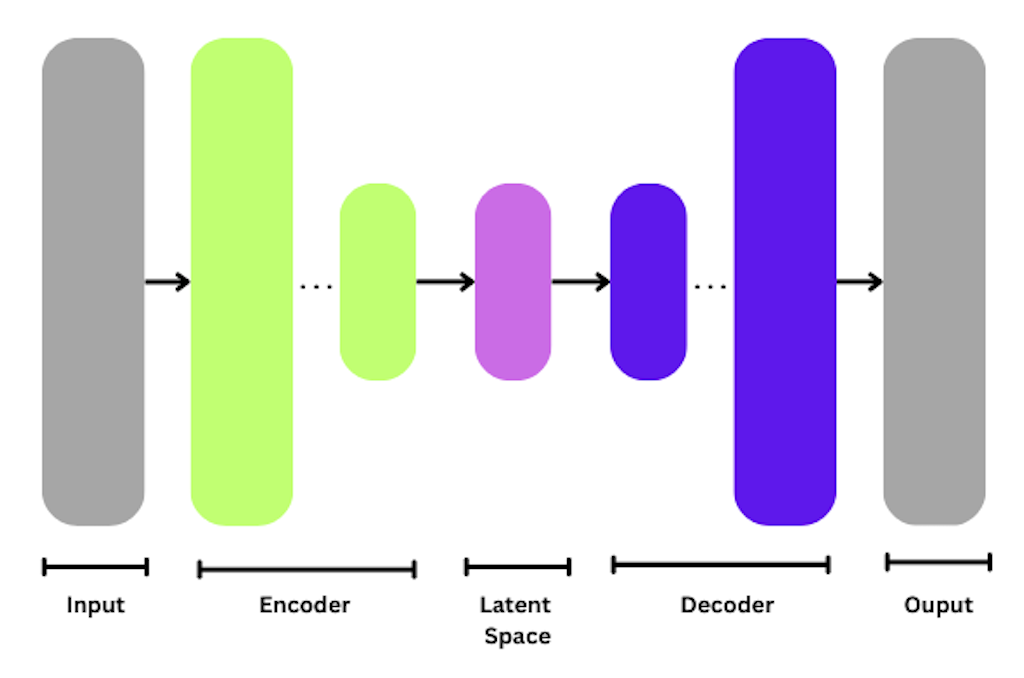

Variational autoencoders (VAEs) = type of neural network based on an encoder-decoder structure that learns a compressed representation of the input data (the latent space) and can then generate new examples by sampling from this latent space. The encoder maps input data to a latent representation, and the decoder aims to reconstruct the original data from that representation.

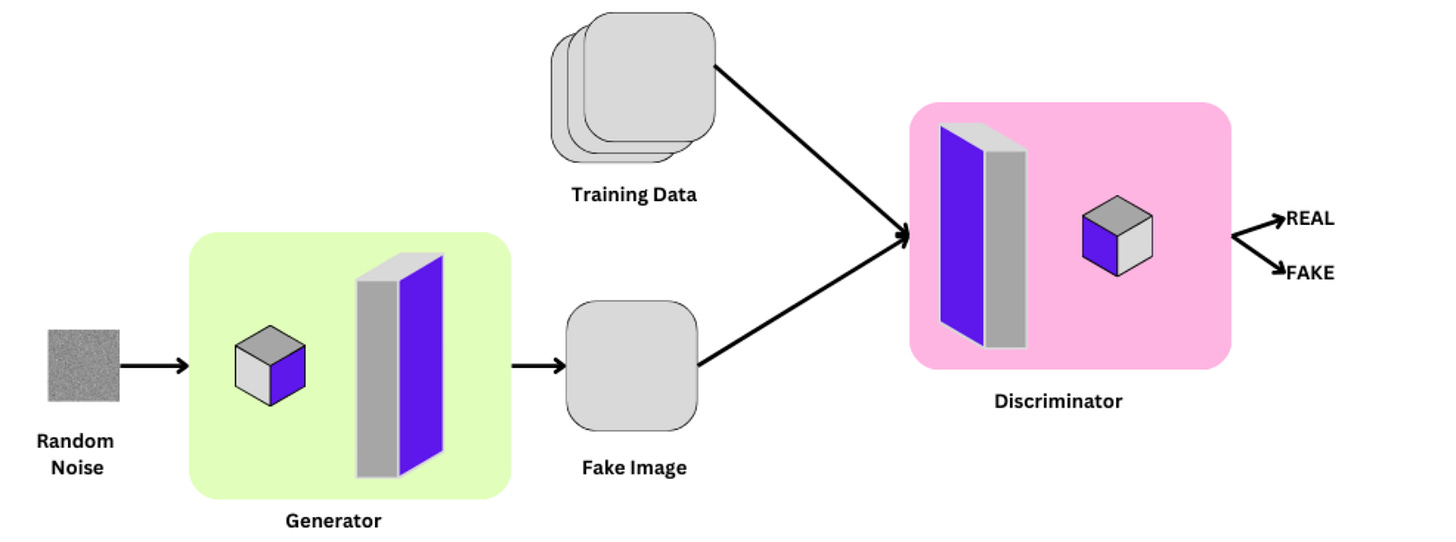

Generative adversarial networks (GANs) = type of neural network that can generate new data similar to a given dataset. They are trained in an adversarial process where a generator network generates data samples, and a discriminator network evaluates the generated samples and determines if they are real or fake. The generator network is trained to improve its ability to generate realistic data by trying to trick the discriminator network.

Transformers = type of neural network used extensively for NLP tasks, such as language translation and text generation. They rely on self-attention mechanisms to learn contextual relationships between words in a text sequence. Because they process all positions of a sequence at once, they are easily parallelizable and fast to train.

Autoregressive models = type of model that generates new data one element at a time, with each new element conditioned on the elements generated before it. They are well suited to sequential data, such as time series or text, where each new value depends on previous values.
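
To make the "each new value depends on previous values" idea concrete, here is a minimal sketch of an autoregressive text generator. It is a toy bigram model, not how an LLM is built: the corpus, the function names, and the choice to condition on only one previous word are all assumptions made for illustration.

```python
import random
from collections import defaultdict


def fit_bigram_counts(tokens):
    """Count how often each token follows each other token."""
    counts = defaultdict(lambda: defaultdict(int))
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts


def generate(counts, start, length, seed=0):
    """Generate tokens one at a time, each sampled given the previous token."""
    rng = random.Random(seed)
    out = [start]
    for _ in range(length - 1):
        followers = counts.get(out[-1])
        if not followers:  # no known continuation: stop early
            break
        choices = list(followers)
        weights = [followers[token] for token in choices]
        out.append(rng.choices(choices, weights=weights, k=1)[0])
    return out


# Hypothetical toy corpus, used only for this illustration.
corpus = "the cat sat on the mat and the cat slept".split()
counts = fit_bigram_counts(corpus)
sample = generate(counts, "the", 6)
print(" ".join(sample))
```

Every generated word is drawn from the words that followed the previous word in the corpus, which is the autoregressive property in its simplest form.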

Large language models

Large language models, or LLMs, are a type of machine learning model that can generate natural language text with impressive quality and fluency. They are trained on massive text datasets using deep neural network architectures, such as transformers, and learn to predict the probability distribution of the next word in a text sequence. Because they can process and generate human-like text, they have a wide variety of applications:

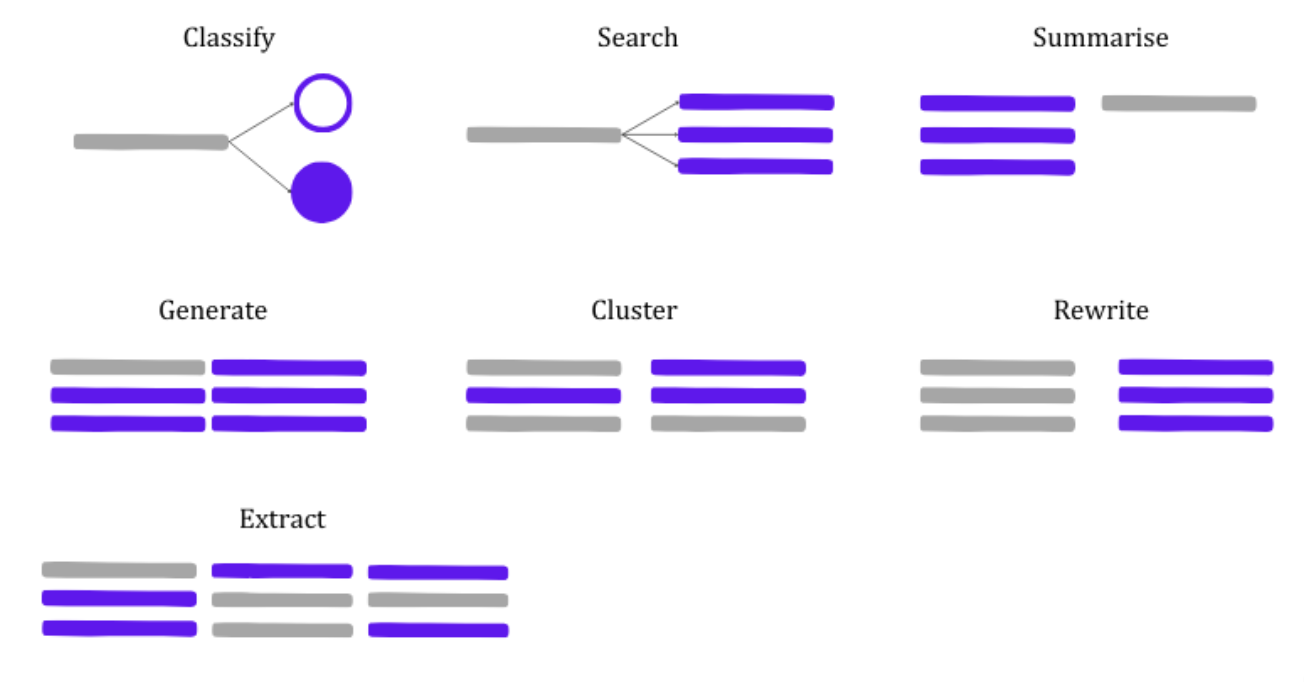

Text Generation - LLMs can generate coherent and contextually relevant text based on a given prompt. This is useful for chatbots, content creation, and creative writing assistance.

Summarisation - LLMs can be employed to summarise long pieces of text, extracting key information and condensing it into a more concise form.

Classification - LLMs can classify text into predefined categories or labels, making them useful for tasks such as spam detection, topic categorization, and sentiment classification.

Rewriting - Due to their ability to understand context, grammar, and semantics, LLMs can generate alternative versions of a given text while preserving its meaning.

LLMs can be classified into:

Base LLMs - models pre-trained on large amounts of text to predict the next word in a sequence. They are good at continuing a piece of text but are not specifically trained to follow instructions, so they may respond to a question with more text in the same style rather than with a direct answer.

Fine-tuned LLMs - large language models built on a base LLM that have undergone additional training on a specific, often narrower, dataset to adapt the pre-trained model to a particular task or domain. They are often further refined using reinforcement learning from human feedback (RLHF), where human ratings of the model's responses are used to steer it toward more helpful and reliable output.

Prompt Engineering

A prompt is an input provided to the model in order to generate a response or prediction. The prompt can be a sentence, a question, a paragraph, or an instruction.

A prompt is made up of elements that make it more effective and efficient. Which elements are used depends on the context and the purpose of the prompt. A prompt can be split into:

instruction = the task the model is asked to perform, stated as a command or a question.

Instruction: "Generate a creative story about a mysterious journey."

context = information or background provided to a language model alongside the primary prompt or query. This additional context aims to guide the model's understanding and influence its responses. It can include information such as the intended audience, the purpose of the task, and any relevant details that provide additional information about the task at hand.

Instruction: "Continue the conversation between Alice and Bob."

Context: "Alice and Bob are old friends meeting after years. They are discussing their favourite memories together."

constraints = limitations or conditions imposed on the output a language model is asked to produce. They ensure that the generated content meets given criteria or conforms to certain rules. They can also be used to encourage creativity or to introduce additional challenges.

Instruction: "Write a summary of the article."

Context: "The article is about the impact of climate change on biodiversity."

Constraints: "Ensure the summary is no longer than 150 words."

format = the structure or layout of the generated text that the prompt is intended to produce.

Instruction: "Compose a formal response to a customer query."

Context: "The customer is inquiring about the status of their recent order."

Constraints: "Provide accurate information without revealing internal processes."

Format Specifications: "The response should be written in a professional and reassuring tone."

Prompt engineering = the process of designing effective prompts for generative AI models.
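
The prompt elements described in this section can also be assembled programmatically. The helper below is a minimal sketch: the function name `build_prompt`, its parameter names, and the labelled-line layout are assumptions for illustration, not a standard API.

```python
def build_prompt(instruction, context=None, constraints=None, output_format=None):
    """Join the non-empty prompt elements into one labelled prompt string."""
    parts = [
        ("Instruction", instruction),
        ("Context", context),
        ("Constraints", constraints),
        ("Format", output_format),
    ]
    # Elements left as None are skipped, so only the instruction is required.
    return "\n".join(f"{label}: {text}" for label, text in parts if text)


prompt = build_prompt(
    instruction="Write a summary of the article.",
    context="The article is about the impact of climate change on biodiversity.",
    constraints="Ensure the summary is no longer than 150 words.",
)
print(prompt)
```

Keeping the elements as separate arguments makes it easy to vary one of them, for example the constraints, while holding the rest of the prompt fixed.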

Prompt Engineering Best Practices

Prompt engineering plays a crucial role in obtaining desired and controlled results from language models. Here are some things to keep in mind:

Simplicity - Clearly articulate the task or request in your instruction to guide the model effectively. Avoid ambiguity so that the model understands the user's intent.

❌ "In a lengthy manner, elaborate on the implications of the technological advancements witnessed in recent years."

✅ "Summarise the latest technological advancements witnessed this year in the artificial intelligence domain."

Context and Constraints - Providing these helps the model understand the setting, characters, or background information, leading to more coherent and context-aware responses.

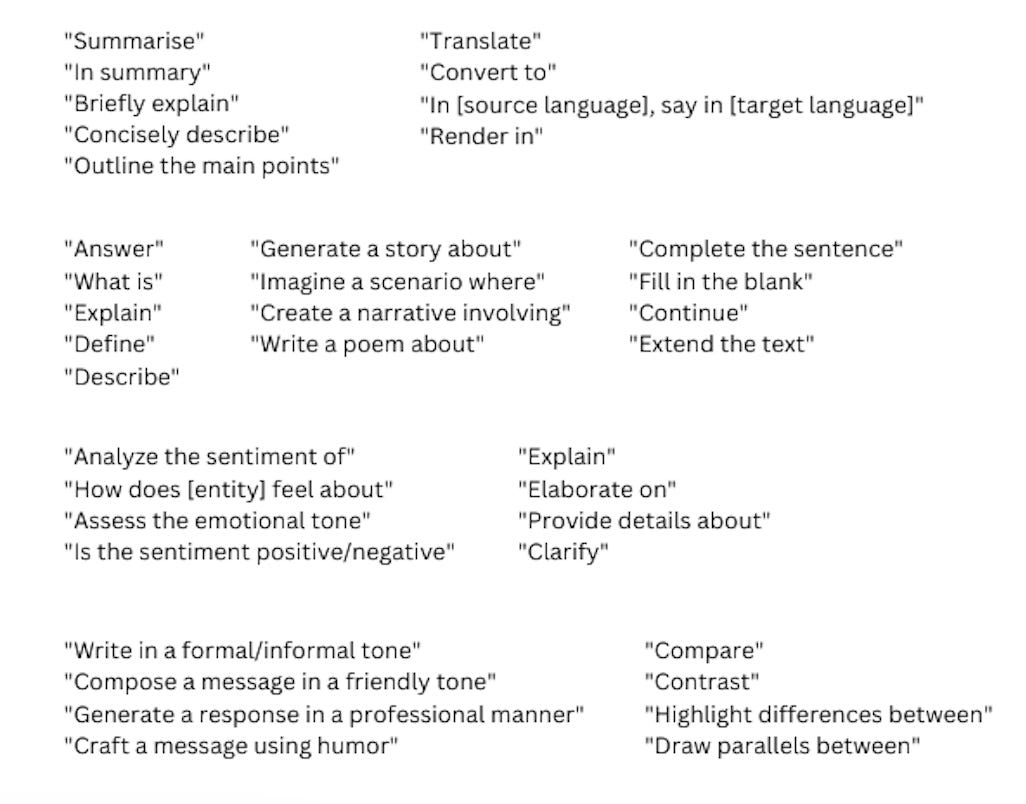

❌ "Continue the conversation between two people."

✅ "Continue the conversation between Alice and Bob. They are discussing their recent trip to Paris."

Use Keywords - Use specific words or phrases that convey the intended meaning and guide the model toward generating the desired output.

Role Prompting

= technique in which the input prompt assigns the model a specific role or persona. It is often used to tailor a language model's behaviour for a particular application or domain without any additional training: the prompt gives the model explicit instructions about the role it should assume when generating responses.
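
In chat-style interfaces, the role is often placed in a separate message ahead of the task. The sketch below assumes a message list with `system` and `user` roles, a layout many chat APIs accept; the helper name `role_prompt` is hypothetical, and the exact message format depends on the provider you use.

```python
def role_prompt(role, task):
    """Return a chat-style message list that assigns the model a role."""
    return [
        {"role": "system", "content": f"You are {role}."},
        {"role": "user", "content": task},
    ]


messages = role_prompt(
    "a film critic",
    "Write a review for the last season of Ted Lasso, "
    "discussing its strengths and weaknesses.",
)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```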

Examples:

Creative writing role

Prompt: "You are a storyteller. Craft an engaging short story set in a post-apocalyptic world."

Film critic role

Prompt: "You are a film critic. Write a review for the last season of Ted Lasso, discussing its strengths and weaknesses."

Travel guide role

Prompt: "You are a travel guide. Write a compelling description of Madeira, emphasising its cultural and historical significance."

Techniques of prompting

Zero-shot, one-shot and few-shot prompting

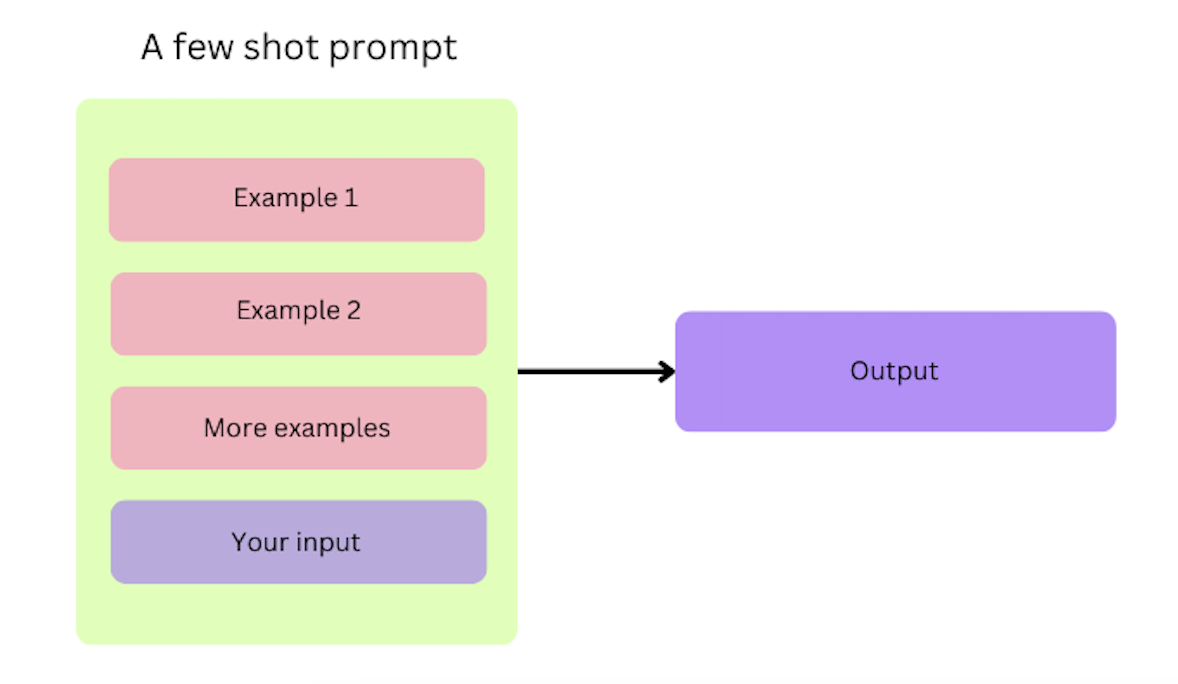

These techniques differ in the number of examples provided to a language model in the prompt. In zero-shot prompting the model answers without being shown any examples, one-shot prompting includes a single example or template, and few-shot prompting includes a small number of examples, usually between two and five. None of them involves any additional training of the model.
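
Since the three variants differ only in how many examples precede the question, one small helper can build all of them. This is a minimal sketch; the function name `few_shot_prompt` and the newline-separated layout are assumptions.

```python
def few_shot_prompt(examples, question):
    """Place worked examples before the question; an empty list gives zero-shot."""
    return "\n".join(list(examples) + [question])


zero_shot = few_shot_prompt([], "What is 2+2?")
one_shot = few_shot_prompt(["3+3 is 6"], "What is 2+2?")
few_shot = few_shot_prompt(["3+3 is 6", "5+5 is 10"], "What is 2+2?")
print(few_shot)
```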

Zero-shot prompt:

What is 2+2?

One-shot prompt:

3+3 is 6

What is 2+2?

Few-shot prompt:

3+3 is 6

5+5 is 10

What is 2+2?

Chain-of-Thought Prompting (CoT)

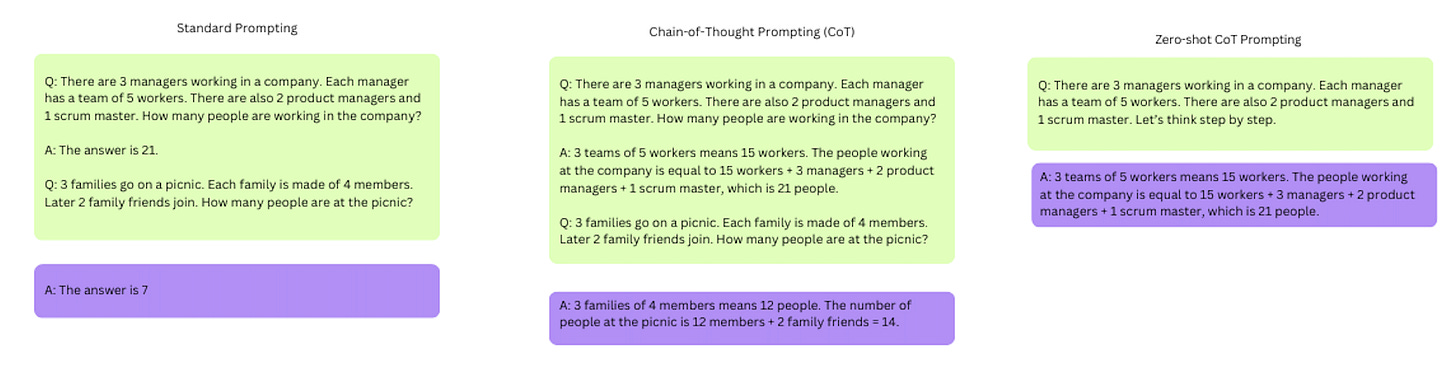

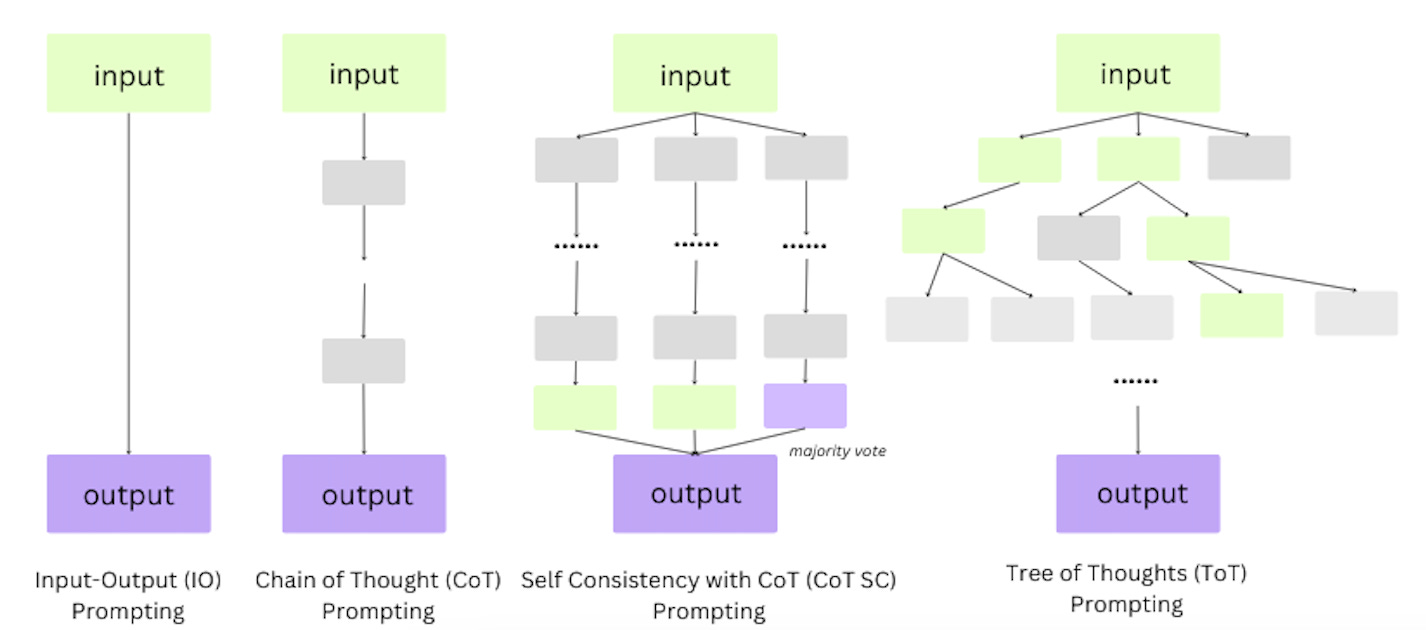

CoT - introduced by Wei et al. (2022), the idea is to have the model produce a series of intermediate reasoning steps before giving its final answer, typically by including worked examples in the prompt. This approach is particularly useful for tasks that require multi-step reasoning, such as arithmetic or logic problems.

Zero-Shot CoT - introduced by Kojima et al. (2022), adds a simple prompt like “Let’s think step by step” after the question to elicit a reasoning chain from the LLM.
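
As a minimal sketch, Zero-Shot CoT amounts to appending the trigger phrase to the question. The helper name `zero_shot_cot` is hypothetical; the last lines simply re-check the arithmetic that a correct reasoning chain for this question should reach.

```python
COT_TRIGGER = "Let's think step by step."


def zero_shot_cot(question):
    """Append the reasoning trigger phrase after the question."""
    return f"{question.strip()} {COT_TRIGGER}"


question = (
    "There are 3 managers working in a company. Each manager has a team "
    "of 5 workers. There are also 2 product managers and 1 scrum master. "
    "How many people are working in the company?"
)
prompt = zero_shot_cot(question)
print(prompt)

# The arithmetic the model's reasoning chain should arrive at.
workers = 3 * 5
total = workers + 3 + 2 + 1
print(total)  # 21
```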

Prompt: There are 3 managers working in a company. Each manager has a team of 5 workers. There are also 2 product managers and 1 scrum master. How many people are working in the company? Let’s think step by step.

Answer: 3 teams of 5 workers means 15 workers. The number of people working at the company is 15 workers + 3 managers + 2 product managers + 1 scrum master, which is 21 people.

Manual-CoT, usually used as few-shot CoT, relies on manually designed examples, where each example consists of a question and a reasoning chain leading to the answer.

Prompt:

Q: There are 3 managers working in a company. Each manager has a team of 5 workers. There are also 2 product managers and 1 scrum master. How many people are working in the company?

A: 3 teams of 5 workers means 15 workers. The number of people working at the company is 15 workers + 3 managers + 2 product managers + 1 scrum master, which is 21 people.

Q: 3 families go on a picnic. Each family is made of 4 members. Later 2 family friends join. How many people are at the picnic?

Answer: 3 families of 4 members means 12 people. The number of people at the picnic is 12 members + 2 family friends = 14.

Automatic Chain-of-Thought (Auto-CoT) - introduced by Zhang et al. (2022) to eliminate the manual effort of Manual-CoT. Auto-CoT has 2 main steps:

Question Clustering: First, the questions of a given dataset are partitioned into a few clusters of similar questions. For example, among a set of gardening questions, "How do I care for an Alocasia Wentii?" and "What time of the year should I plant tulips?" would go into different clusters.

Demonstration Sampling: Next, one representative question is picked from each cluster, and its reasoning chain is generated with a Zero-Shot CoT prompt (the “Let’s think step by step” prompt). This way, the model produces the demonstrations automatically, with no hand-written reasoning chains.

Code for Auto-CoT is available on GitHub.
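
The two steps can be sketched in a few lines. This is a simplified illustration, not the Auto-CoT implementation: word overlap with a greedy grouping stands in for a proper embedding-based clustering, the first question of each cluster is used as its representative, and `fake_generate` is a hypothetical placeholder for a real model call.

```python
def jaccard(a, b):
    """Word-overlap similarity between two questions, in [0, 1]."""
    wa, wb = set(a.lower().split()), set(b.lower().split())
    union = wa | wb
    return len(wa & wb) / len(union) if union else 0.0


def cluster_questions(questions, threshold=0.2):
    """Greedy clustering: join the first cluster whose seed is similar enough."""
    clusters = []
    for question in questions:
        for cluster in clusters:
            if jaccard(question, cluster[0]) >= threshold:
                cluster.append(question)
                break
        else:  # no similar cluster found: start a new one
            clusters.append([question])
    return clusters


def build_demonstrations(questions, generate):
    """Pick one question per cluster and let the model write its reasoning."""
    demos = []
    for cluster in cluster_questions(questions):
        question = cluster[0]
        prompt = f"Q: {question}\nA: Let's think step by step."
        demos.append(f"{prompt} {generate(prompt)}")
    return demos


def fake_generate(prompt):
    """Hypothetical stand-in for an LLM call."""
    return "<reasoning chain written by the model>"


questions = [
    "How do I care for an Alocasia Wentii?",
    "How do I care for a Monstera?",
    "What time of the year should I plant tulips?",
    "What time of the year should I plant daffodils?",
]
demos = build_demonstrations(questions, fake_generate)
print("\n\n".join(demos))
```

The resulting demonstrations would then be prepended to a new question, exactly as the hand-written examples are in Manual-CoT.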

Self-Consistency

Self-consistency, proposed by Wang et al. (2022), is a method that involves presenting the same prompt to a model multiple times and then determining the final answer based on the majority result.
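
The majority vote itself is simple to express. In the sketch below, `fake_sample` is a hypothetical stand-in for a model that returns a possibly different answer on each call; in practice the final answer would first have to be extracted from each sampled response before counting.

```python
from collections import Counter


def self_consistency(sample_answer, prompt, n_samples=5):
    """Sample several answers to one prompt and return the most common one."""
    answers = [sample_answer(prompt) for _ in range(n_samples)]
    winner, votes = Counter(answers).most_common(1)[0]
    return winner, votes


# Canned answers standing in for three sampled model responses.
canned = iter(["12", "1", "12"])


def fake_sample(prompt):
    """Hypothetical stand-in for a sampled LLM call."""
    return next(canned)


answer, votes = self_consistency(
    fake_sample, "How many months have 29 days?", n_samples=3
)
print(answer, votes)  # 12 2
```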

Prompt: How many months have 29 days?

Answer: All 12 months have at least 29 days. In leap years, February has 29 days instead of the usual 28. So, while all months have at least 29 days, February is the month where this number can vary depending on whether it's a leap year or not.

Answer: February is the only month that has only 29 days in a leap year.

Answer: All 12 months have at least 29 days. Every month in a calendar year has a minimum of 29 days. However, February is the only month that typically has 29 days in a leap year.

Tree of Thoughts (ToT)

Yao et al. (2023) and Long (2023) recently proposed Tree of Thoughts (ToT), a framework that generalises chain-of-thought prompting: models can follow different paths of reasoning and evaluate their own decisions to determine what to do next. They can also look ahead or revisit past decisions when faced with significant choices.

Code for ToT is available here and here.

Compared to the other frameworks described earlier that lack the ability to plan ahead and backtrack to evaluate different options, ToT overcomes these limitations by framing any task as a search over a tree. The approach involves the following steps:

Thought Decomposition - the problem should be broken down into intermediate steps that the model can follow

Thought Generator - from a given tree node, the model should be able to generate and explore different next steps. To generate the next \(k\) candidates, two strategies are considered:

Sample independent and identically distributed (i.i.d.) thoughts - thoughts are sampled from a Chain-of-Thought (CoT) prompt: for each of the \(k\) candidates, a thought \(z^{(j)}\) is sampled based on the current state \(s\). Works well when the thought space is rich and each thought is relatively large or complex. Given a thought generator \(G(p_{\theta}, s, k)\) and a tree state \(s = [x, z_{1 \dots i}]\):

\( z^{(j)} \sim p_{\theta}^{CoT}(z_{i+1} \mid s) = p_{\theta}^{CoT}(z_{i+1} \mid x, z_{1 \dots i}) \quad (j = 1 \dots k) \)

Propose thoughts sequentially - the model proposes thoughts sequentially using a “propose prompt”. A sequence of thoughts is sampled based on the current state \(s\). Works well when the thought space is more constrained and each thought is relatively small or simple:

\( [z^{(1)}, \dots, z^{(k)}] \sim p_{\theta}^{propose}(z_{i+1}^{(1 \dots k)} \mid s) \)

State Evaluator - evaluates the progress each step made towards solving the problem. Two strategies are presented, which can be used either independently or together:

Value each state independently - each state \(s\) is evaluated independently and given a score or a label, e.g., sure/likely/impossible.

Vote across states - states are evaluated collectively. A “good” state \(s^*\) is selected by a vote prompt that compares the different states in \(S\).

Search Algorithms - depending on the tree structure, different search algorithms can be plugged in, such as breadth-first search (BFS) or depth-first search (DFS).
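
The four components above can be sketched as a small breadth-first search. This is an illustration under simplifying assumptions, not the authors' implementation: in a real ToT setup both `propose` and `evaluate` would be LLM calls, whereas here they are plain functions on a made-up toy task (pick three steps from 1, 3 and 4 that sum to 10).

```python
TARGET = 10
STEPS = (1, 3, 4)


def propose(state):
    """Thought generator: extend the partial solution by one candidate step."""
    return [state + (step,) for step in STEPS]


def evaluate(state):
    """State evaluator: higher is better; 0 means the target is hit exactly."""
    return -abs(TARGET - sum(state))


def tot_bfs(initial, propose, evaluate, depth, beam_width):
    """Breadth-first search that keeps only the best states at each level."""
    frontier = [initial]
    for _ in range(depth):
        # Expand every kept state into its candidate next thoughts.
        candidates = [child for state in frontier for child in propose(state)]
        if not candidates:
            break
        # Score the candidates and keep the most promising ones.
        candidates.sort(key=evaluate, reverse=True)
        frontier = candidates[:beam_width]
    return max(frontier, key=evaluate)


best = tot_bfs((), propose, evaluate, depth=3, beam_width=2)
print(best, sum(best))  # (4, 3, 3) 10
```

Keeping more than one state per level (`beam_width=2`) is what lets the search recover when the locally best step turns out not to lead to a solution.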

Hulbert (2023) has proposed a kind of Tree-of-Thought Prompting based on the framework described above in order to evaluate intermediate thoughts in a single prompt. An example of such a prompt might be:

Imagine three different experts are answering this question.

All experts will write down 1 step of their thinking,

then share it with the group.

Then all experts will go on to the next step, etc.

If any expert realises they're wrong at any point then they leave.

The question is...

The mix of human creativity and AI has the potential to reshape how we deal with technology. We're on a journey of ongoing exploration, with researchers, practitioners, and enthusiasts working together to discover new ways generative AI can be creative and useful.

If you enjoyed this first part, stay tuned for next week’s newsletter, where we’ll dive into other prompting techniques and frameworks like Automatic Reasoning and Tool-use (ART), Automatic Prompt Engineer (APE), Active-Prompt, Directional Stimulus Prompting and ReAct Prompting.