Understanding the Dual-Write Challenge in Multi-System Environments

On the risks to data consistency, the causes behind synchronisation issues, and strategies to mitigate these problems.

The Dual-Write Problem

The dual-write problem is a critical issue that arises when updating multiple systems simultaneously. This challenge significantly impacts data consistency, system reliability, and overall performance in modern software architectures.

At its core, the dual-write problem stems from the difficulty of ensuring that all write operations across different systems either succeed or fail together. Traditional database transactions provide atomicity within a single database, but this guarantee doesn't extend to multiple systems. This limitation creates a risk of partial updates, which can lead to data inconsistencies and compromise the integrity of the overall system.

Failure scenarios further complicate the dual-write problem. System crashes, network failures, and partial outages can occur at any point during the update process, potentially leaving systems out of sync. For instance, if a failure occurs after writing to one system but before updating another, the result is inconsistent data across the environment.

To illustrate the dual-write problem, consider a common scenario in microservice architectures: updating a database and sending a notification message. Ideally, these operations should be atomic to ensure data consistency and reliability. However, the lack of a unified transaction mechanism across different systems makes achieving this atomicity challenging.

If the database update is successful but the event notification fails, the downstream service will not be aware of the change, and the system can enter an inconsistent state.

If the database update fails but the event notification is sent, downstream services will act on a change that was never persisted, so they end up holding data that does not exist in the source system.
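The first failure case can be reproduced in a few lines. This is only a sketch: `FlakyBroker` and `place_order_naively` are hypothetical names, and an in-memory SQLite database stands in for the real order store.

```python
import sqlite3


class FlakyBroker:
    """Hypothetical stand-in for a message broker whose publish can fail."""

    def __init__(self, fail: bool) -> None:
        self.fail = fail
        self.published: list[dict] = []

    def publish(self, message: dict) -> None:
        if self.fail:
            raise ConnectionError("broker unreachable")
        self.published.append(message)


def place_order_naively(db: sqlite3.Connection, broker: FlakyBroker, order_id: str) -> None:
    # Write 1: the database transaction commits on its own.
    with db:
        db.execute("INSERT INTO orders (id) VALUES (?)", (order_id,))
    # Write 2: a separate system, outside the database transaction.
    broker.publish({"type": "OrderCreated", "order_id": order_id})


db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE orders (id TEXT PRIMARY KEY)")
broker = FlakyBroker(fail=True)

try:
    place_order_naively(db, broker, "order-1")
except ConnectionError:
    pass  # the order row is already committed; nothing rolls it back

orders_saved = db.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
events_sent = len(broker.published)
print(orders_saved, events_sent)
```

The order is stored but no event was sent, which is exactly the out-of-sync state described above. Swapping the order of the two writes only trades this failure for the second one.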

As organisations continue to adopt microservices and distributed architectures, addressing the dual-write problem becomes increasingly crucial.

The Transactional Outbox Pattern

One way to solve this is through the Transactional Outbox pattern. If we have a database that supports transactional updates, then we can use it to overcome the dual-write problem.

Let’s take the example of a food delivery company's system. When a customer places an order, several things need to happen:

1. The order details need to be saved in the database.

2. A notification needs to be sent to the restaurant.

3. The customer should receive an order confirmation.

4. The order should be made available for driver assignment, and so on.

The Order Service receives the order request and starts a database transaction. It inserts the order details into the Orders table and, in the same transaction, inserts messages into the Outbox table. These messages might include:

a) OrderCreated event

b) NotifyRestaurant message

c) SendCustomerConfirmation message

The database transaction is then committed, ensuring both the order and the outbox messages are saved atomically.
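The write side can be sketched as follows. The table layout, the `place_order` function and the column names are assumptions made for illustration, and SQLite stands in for whichever transactional database the Order Service uses. The second call simulates a failure in the middle of the transaction to show that neither the order nor any outbox row survives.

```python
import json
import sqlite3

MESSAGE_TYPES = ("OrderCreated", "NotifyRestaurant", "SendCustomerConfirmation")


def create_schema(db: sqlite3.Connection) -> None:
    db.execute("CREATE TABLE orders (id TEXT PRIMARY KEY)")
    db.execute(
        "CREATE TABLE outbox ("
        " id INTEGER PRIMARY KEY AUTOINCREMENT,"
        " message_type TEXT NOT NULL,"
        " payload TEXT NOT NULL,"
        " published INTEGER NOT NULL DEFAULT 0)"
    )


def place_order(db: sqlite3.Connection, order_id: str, details: dict) -> None:
    # `with db` commits if the block succeeds and rolls back if it raises,
    # so the order row and the outbox rows are saved together or not at all.
    with db:
        db.execute("INSERT INTO orders (id) VALUES (?)", (order_id,))
        for message_type in MESSAGE_TYPES:
            payload = json.dumps({"order_id": order_id, **details})
            db.execute(
                "INSERT INTO outbox (message_type, payload) VALUES (?, ?)",
                (message_type, payload),
            )


db = sqlite3.connect(":memory:")
create_schema(db)

place_order(db, "order-1", {"restaurant_id": "r-9"})

try:
    # Simulated mid-transaction failure: a set cannot be serialised to JSON,
    # so json.dumps raises after the order row has already been inserted.
    place_order(db, "order-2", {"items": {"burger"}})
except TypeError:
    pass

order_ids = [row[0] for row in db.execute("SELECT id FROM orders")]
outbox_count = db.execute("SELECT COUNT(*) FROM outbox").fetchone()[0]
print(order_ids, outbox_count)
```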

The Publisher Service runs continuously or at frequent intervals. It queries the Outbox table for unpublished messages. When it finds messages, it reads them and publishes them to the Message Broker. After successful publication, it marks the messages as processed or deletes them from the Outbox table.
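One pass of such a publisher might look like the sketch below. `publish_pending`, the `published` flag column and the `fake_publish` callback are illustrative assumptions; a real relay would call the client library of its message broker instead.

```python
import sqlite3
from typing import Callable


def publish_pending(
    db: sqlite3.Connection,
    publish: Callable[[str, str], None],
    batch_size: int = 100,
) -> int:
    """Publish unpublished outbox rows in insertion order; return how many were sent."""
    rows = db.execute(
        "SELECT id, message_type, payload FROM outbox"
        " WHERE published = 0 ORDER BY id LIMIT ?",
        (batch_size,),
    ).fetchall()
    sent = 0
    for row_id, message_type, payload in rows:
        publish(message_type, payload)  # if this raises, the row stays unpublished
        with db:
            db.execute("UPDATE outbox SET published = 1 WHERE id = ?", (row_id,))
        sent += 1
    return sent


db = sqlite3.connect(":memory:")
db.execute(
    "CREATE TABLE outbox ("
    " id INTEGER PRIMARY KEY AUTOINCREMENT,"
    " message_type TEXT NOT NULL,"
    " payload TEXT NOT NULL,"
    " published INTEGER NOT NULL DEFAULT 0)"
)
with db:
    db.executemany(
        "INSERT INTO outbox (message_type, payload) VALUES (?, ?)",
        [
            ("OrderCreated", '{"order_id": "order-1"}'),
            ("NotifyRestaurant", '{"order_id": "order-1"}'),
        ],
    )

delivered: list[str] = []


def fake_publish(message_type: str, payload: str) -> None:
    delivered.append(message_type)


first_run = publish_pending(db, fake_publish)
second_run = publish_pending(db, fake_publish)
print(first_run, second_run, delivered)
```

Because the row is marked only after the publish call returns, a crash between the two steps means the message is published again on the next pass. Delivery is therefore at-least-once, and consumers should tolerate duplicates.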

The Message Broker receives the published messages from the Publisher Service and routes these messages to the appropriate queues or topics.

Then we have all the Downstream Services. A Restaurant Service might subscribe to the "OrderCreated" event. When received, it notifies the restaurant about the new order. A Notification Service might pick up the "SendCustomerConfirmation" message and send an order confirmation to the customer. A Driver Assignment Service might listen for "OrderCreated" events to begin the process of assigning a driver.
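The fan-out from broker to downstream services can be illustrated with a toy in-memory broker. `InMemoryBroker` is a hypothetical class written for this sketch; it delivers synchronously and has none of the durability or retry behaviour of a real broker.

```python
from collections import defaultdict
from typing import Callable


class InMemoryBroker:
    """Toy broker: routes each message to every handler subscribed to its topic."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Callable[[dict], None]]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Callable[[dict], None]) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, message: dict) -> None:
        for handler in self._subscribers[topic]:
            handler(message)


activity: list[str] = []


def restaurant_service(message: dict) -> None:
    activity.append(f"restaurant notified of {message['order_id']}")


def driver_assignment_service(message: dict) -> None:
    activity.append(f"driver assignment started for {message['order_id']}")


def notification_service(message: dict) -> None:
    activity.append(f"confirmation sent for {message['order_id']}")


broker = InMemoryBroker()
broker.subscribe("OrderCreated", restaurant_service)
broker.subscribe("OrderCreated", driver_assignment_service)
broker.subscribe("SendCustomerConfirmation", notification_service)

broker.publish("OrderCreated", {"order_id": "order-1"})
broker.publish("SendCustomerConfirmation", {"order_id": "order-1"})
print(activity)
```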

When to use:

You need to ensure consistency between your database and a message broker.

You want to implement reliable messaging in a microservices architecture.

You need to guarantee that a message is sent if and only if a database transaction commits.

Pros:

Ensures consistency between database and messaging system.

Works well with existing relational databases.

Simpler to implement than full event sourcing.

Doesn't require changing your entire architecture.

Cons:

Doesn't provide a full audit trail like event sourcing.

Requires polling or additional mechanisms to publish messages.

Can add complexity to your database schema.

Change Data Capture

In the Change Data Capture (CDC) approach, the service writes its state to a data store that supports CDC, and the CDC infrastructure then notifies external systems of the changes. One example is DynamoDB with DynamoDB Streams.

Coming back to the earlier example: in a CDC approach, the Order Service receives the order request, processes the order, and inserts the order details into the Orders table in the database. The CDC tool continuously monitors the database's transaction log. When it detects a change in the Orders table (in this case, a new order insertion), it captures this change. The CDC tool then transforms the captured change into a structured event message and publishes it to a message broker or stream. The event contains details about the new order, such as order ID, customer ID, restaurant ID, and other relevant information. As in the outbox example, multiple Downstream Services can then subscribe to the order change events from the message broker.
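The capture step can be sketched with a simulated transaction log. Everything here is an assumption made for illustration: real CDC tools read the database's own log format, and the `lsn` field, the entry layout and `capture_changes` are invented for this example.

```python
def capture_changes(transaction_log: list[dict], after_position: int, table: str = "orders"):
    """Turn log entries newer than `after_position` into events for one table.

    Returns the events and the new position, so the next call can resume
    from where this one stopped.
    """
    events = []
    position = after_position
    for entry in transaction_log:
        if entry["lsn"] <= after_position:
            continue  # already processed on an earlier pass
        position = entry["lsn"]
        if entry["table"] != table:
            continue  # changes to other tables are skipped
        events.append(
            {
                "event_type": f"{table}.{entry['op'].lower()}",
                "position": entry["lsn"],
                "data": entry["row"],
            }
        )
    return events, position


# Simulated transaction log; the Order Service itself knows nothing about it.
log = [
    {"lsn": 1, "table": "orders", "op": "INSERT",
     "row": {"order_id": "order-1", "customer_id": "c-1", "restaurant_id": "r-9"}},
    {"lsn": 2, "table": "drivers", "op": "UPDATE", "row": {"driver_id": "d-4"}},
]

first_events, position = capture_changes(log, after_position=0)

log.append(
    {"lsn": 3, "table": "orders", "op": "INSERT",
     "row": {"order_id": "order-2", "customer_id": "c-2", "restaurant_id": "r-9"}}
)
second_events, position = capture_changes(log, after_position=position)
print(first_events, second_events, position)
```

Tracking the last processed position is what lets the capture process resume after a restart without re-reading the whole log.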

When to use:

You want to track changes in your database for replication or integration purposes.

You need to sync data between different systems in near real-time.

You want to implement event-driven architectures without changing your existing systems.

You need to create data lakes or data warehouses from operational databases.

Pros:

Non-intrusive - can be implemented without changing existing applications.

Captures all changes, including those made outside your application.

Can be used with legacy systems.

Supports real-time data integration.

Cons:

May not capture the full business context of changes.

Can be complex to set up and manage.

May impact database performance. CDC can add some overhead to the database, as it needs to read the transaction logs.

Might require specific database features or additional tools.

When the Orders table schema changes, downstream consumers need to be prepared to handle both old and new event formats.
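One way a consumer can cope with the last point is to normalise every incoming event to a single internal shape. The sketch below assumes a hypothetical `schema_version` field and a hypothetical rename of the `customer` column to `customer_id`; neither comes from a specific CDC tool.

```python
def normalize_order_event(event: dict) -> dict:
    """Map old and new order event formats onto one internal representation."""
    version = event.get("schema_version", 1)  # assume unversioned events are the old format
    if version == 1:
        customer_id = event["customer"]
    elif version == 2:
        customer_id = event["customer_id"]
    else:
        raise ValueError(f"unsupported schema_version: {version}")
    return {"order_id": event["order_id"], "customer_id": customer_id}


old_event = {"order_id": "order-1", "customer": "c-1"}
new_event = {"schema_version": 2, "order_id": "order-1", "customer_id": "c-1"}

normalized_old = normalize_order_event(old_event)
normalized_new = normalize_order_event(new_event)
print(normalized_old == normalized_new)
```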

Event Sourcing

Event sourcing is a pattern for storing data, or changes to data, as events in an append-only log. Appending an event to the log represents a business decision to accept the request and start processing it.

The idea of event sourcing is that the event log is the source of truth, and all other databases are projections of the event log. Any write must first be made to the event log. After this write succeeds, one or more event handlers consume the new event and write it to the other databases. (Cornelia Davis, Cloud Native Patterns, Manning Publications, 2019)
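The log-first write and its projections can be sketched as below. `EventLog` and the two projection functions are hypothetical, and the handlers run synchronously inside `append` only to keep the example short; in a real system they would typically consume the log asynchronously.

```python
from collections import Counter
from typing import Callable


class EventLog:
    """Append-only log; registered handlers build projections from each new event."""

    def __init__(self) -> None:
        self.events: list[dict] = []
        self._handlers: list[Callable[[dict], None]] = []

    def register(self, handler: Callable[[dict], None]) -> None:
        self._handlers.append(handler)

    def append(self, event: dict) -> None:
        self.events.append(event)  # the write to the source of truth comes first
        for handler in self._handlers:
            handler(event)  # projections are derived from the log afterwards


# Two projections standing in for "the other databases".
status_by_order: dict[str, str] = {}
orders_per_restaurant: Counter = Counter()


def project_status(event: dict) -> None:
    status_by_order[event["order_id"]] = event["type"]


def project_restaurant_load(event: dict) -> None:
    if event["type"] == "orderCreated":
        orders_per_restaurant[event["restaurant_id"]] += 1


log = EventLog()
log.register(project_status)
log.register(project_restaurant_load)

log.append({"type": "orderCreated", "order_id": "order-1", "restaurant_id": "r-9"})
log.append({"type": "orderAcceptedByRestaurant", "order_id": "order-1", "restaurant_id": "r-9"})
print(status_by_order, dict(orders_per_restaurant))
```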

In event sourcing, every change to an entity's state is captured as a discrete, fine-grained event. These events are not merely notifications; each event represents a specific, atomic alteration to the entity's state.

As these state-changing events occur, they are published to interested parties and simultaneously persisted in a chronological log. This log becomes the source of truth for all the changes that have occurred to the entity over time. It's akin to a detailed historical ledger, capturing every nuance of the entity's evolution.

The power of event sourcing lies in its ability to reconstruct an entity state. Subscribers to this event log can process the sequence of events to derive the current state of an entity at any given point in time. By "replaying" the events in order, these subscribers can build a complete and accurate picture of the entity's current condition.

Take the design of an order processing system for a food delivery platform as an example. Each step in an order's life cycle is recorded as an event, and all these events (orderCreated, orderAcceptedByRestaurant, orderPreparing etc.) are stored in an append-only log. Each event contains details like timestamp, entity_id, event_type and relevant data. The current state of an order can be reconstructed by replaying all events for that order from the beginning.
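Replaying can be sketched as a simple fold over the log. The `Event` fields follow the ones listed above; the status names in `STATUS_BY_EVENT` and the sample data are assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Event:
    timestamp: int
    entity_id: str
    event_type: str
    data: dict = field(default_factory=dict)


# Hypothetical mapping from event type to the order status it produces.
STATUS_BY_EVENT = {
    "orderCreated": "CREATED",
    "orderAcceptedByRestaurant": "ACCEPTED",
    "orderPreparing": "PREPARING",
}


def replay(events: list[Event], entity_id: str) -> dict:
    """Rebuild the current state of one order by applying its events in order."""
    state: dict = {}
    for event in sorted(events, key=lambda e: e.timestamp):
        if event.entity_id != entity_id:
            continue
        state.update(event.data)
        state["status"] = STATUS_BY_EVENT[event.event_type]
    return state


log = [
    Event(1, "order-1", "orderCreated", {"items": ["burger"]}),
    Event(2, "order-2", "orderCreated", {"items": ["pizza"]}),
    Event(3, "order-1", "orderAcceptedByRestaurant", {"eta_minutes": 25}),
    Event(4, "order-1", "orderPreparing"),
]

order_1 = replay(log, "order-1")
order_2 = replay(log, "order-2")
print(order_1, order_2)
```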

When to use:

You need a complete audit trail of all changes.

You want to reconstruct past states of your system.

Your domain model is complex with many state transitions.

You need to support temporal queries (e.g., "What was the state at time X?").

You want to enable easy implementation of new features based on historical data.
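A temporal query such as "What was the state at time X?" amounts to replaying only the events up to X. The sketch below assumes events are stored as `(timestamp, entity_id, event_type)` tuples; `status_at` is a hypothetical helper.

```python
from datetime import datetime
from typing import Optional


def status_at(events: list[tuple], entity_id: str, as_of: datetime) -> Optional[str]:
    """Return the latest event type recorded for the entity at or before `as_of`."""
    status = None
    for timestamp, event_entity_id, event_type in sorted(events):
        if event_entity_id == entity_id and timestamp <= as_of:
            status = event_type
    return status


events = [
    (datetime(2024, 5, 1, 12, 0), "order-1", "orderCreated"),
    (datetime(2024, 5, 1, 12, 3), "order-1", "orderAcceptedByRestaurant"),
    (datetime(2024, 5, 1, 12, 10), "order-1", "orderPreparing"),
]

before_order = status_at(events, "order-1", datetime(2024, 5, 1, 11, 59))
at_12_05 = status_at(events, "order-1", datetime(2024, 5, 1, 12, 5))
latest = status_at(events, "order-1", datetime(2024, 5, 1, 13, 0))
print(before_order, at_12_05, latest)
```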

Pros:

Provides a full history of changes.

Enables easy debugging and auditing.

Allows for event replay and system recovery.

Supports complex domain modelling.

Cons:

Can be complex to implement and maintain.

May require more storage.

Querying current state can be slower (mitigated by snapshots).
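The snapshot mitigation can be sketched as follows: a snapshot records the state at a given event sequence number, and only later events are replayed on top of it. The `sequence` and `version` fields and both functions are assumptions made for this example. For brevity the code filters an in-memory list; a real store would read only the events after the snapshot's version.

```python
from typing import Optional


def apply_event(state: dict, event: dict) -> dict:
    """Return a new state with the event's data applied and the version advanced."""
    new_state = dict(state)
    new_state.update(event["data"])
    new_state["version"] = event["sequence"]
    return new_state


def load_state(events: list[dict], snapshot: Optional[dict] = None):
    """Rebuild state, starting from a snapshot if one is given.

    Returns the state and the number of events that had to be replayed.
    """
    state = dict(snapshot) if snapshot else {"version": 0}
    replayed = 0
    for event in events:
        if event["sequence"] > state["version"]:
            state = apply_event(state, event)
            replayed += 1
    return state, replayed


statuses = ["CREATED", "ACCEPTED", "PREPARING", "READY", "PICKED_UP"]
events = [
    {"sequence": i, "data": {"status": status}}
    for i, status in enumerate(statuses, start=1)
]

full_state, full_replays = load_state(events)

snapshot, _ = load_state(events[:4])  # snapshot taken after the fourth event
fast_state, fast_replays = load_state(events, snapshot)
print(full_replays, fast_replays, fast_state)
```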

Listen to Yourself

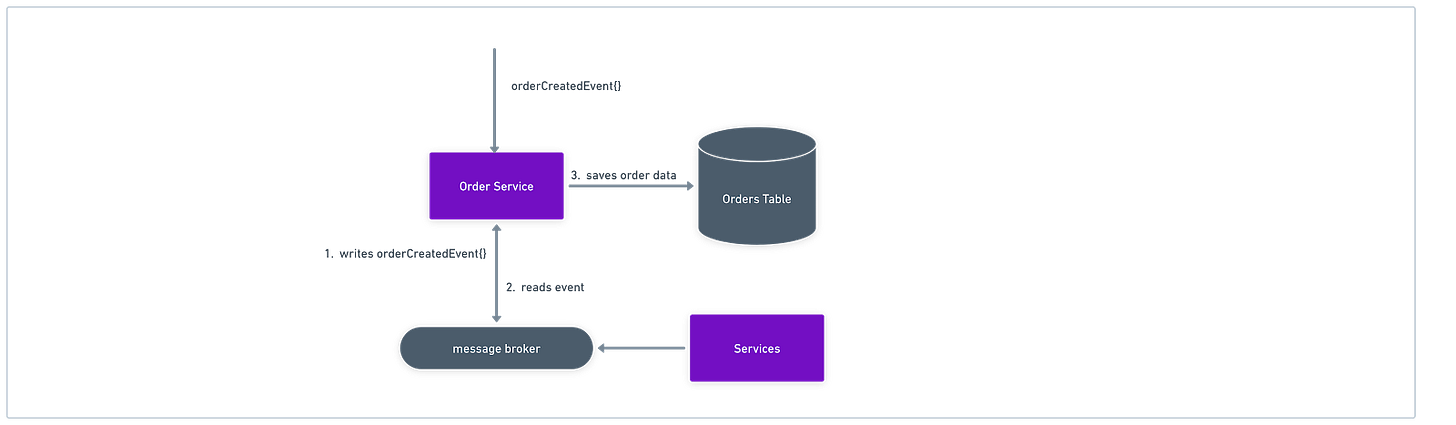

The Listen to Yourself pattern is a variation of event-driven architecture that aims to solve the problem of data consistency between a service's database and its published events. The service publishes events to a message broker as part of its operations. The service then subscribes to its own events from the message broker. Upon receiving its own event, the service updates its database.

The Order Service receives the order request. It prepares the order data and, instead of writing directly to the database, publishes an "OrderCreated" event to the Message Broker. This event contains all necessary order details (order ID, customer ID, restaurant ID, items, total, etc.).

The Message Broker receives the "OrderCreated" event from the Order Service and makes the event available to all subscribed services, including the Order Service itself.

The Order Service subscribes to its own "OrderCreated" events. When it receives the event it just published:

a) It writes the order details to the Orders table in the database.

b) It updates its internal state if necessary.

Multiple Downstream Services also subscribe to the "OrderCreated" events.
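The flow can be sketched with a toy broker that queues messages until they are delivered, which also makes the window between publishing and persisting visible. `QueueingBroker`, `OrderService` and the table layout are hypothetical; the `INSERT OR IGNORE` is one possible way to tolerate the same event being delivered twice.

```python
import sqlite3
from collections import defaultdict, deque


class QueueingBroker:
    """Toy broker that holds published messages until deliver_all() is called."""

    def __init__(self) -> None:
        self._subscribers = defaultdict(list)
        self._queue = deque()

    def subscribe(self, topic, handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic, message) -> None:
        self._queue.append((topic, message))

    def deliver_all(self) -> None:
        while self._queue:
            topic, message = self._queue.popleft()
            for handler in list(self._subscribers[topic]):
                handler(message)


class OrderService:
    def __init__(self, db: sqlite3.Connection, broker: QueueingBroker) -> None:
        self.db = db
        self.broker = broker
        # The service listens to the events it publishes itself.
        broker.subscribe("OrderCreated", self.on_order_created)

    def place_order(self, order_id: str, total: float) -> None:
        # The only write in the request path is the publish.
        self.broker.publish("OrderCreated", {"order_id": order_id, "total": total})

    def on_order_created(self, event: dict) -> None:
        with self.db:
            self.db.execute(
                "INSERT OR IGNORE INTO orders (id, total) VALUES (?, ?)",
                (event["order_id"], event["total"]),
            )


def count_orders(db: sqlite3.Connection) -> int:
    return db.execute("SELECT COUNT(*) FROM orders").fetchone()[0]


db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE orders (id TEXT PRIMARY KEY, total REAL NOT NULL)")
broker = QueueingBroker()
service = OrderService(db, broker)

downstream_seen: list[str] = []
broker.subscribe("OrderCreated", lambda event: downstream_seen.append(event["order_id"]))

service.place_order("order-1", 24.5)
rows_before_delivery = count_orders(db)  # event published, database not yet updated

broker.deliver_all()
rows_after_delivery = count_orders(db)
print(rows_before_delivery, rows_after_delivery, downstream_seen)
```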

How it's different:

Unlike the Transactional Outbox pattern, it doesn't require a separate outbox table in the database.

It's simpler than full Event Sourcing but still provides a level of eventual consistency.

It differs from CDC in that the service actively publishes events, rather than relying on database log parsing.

Pros:

Simplifies the architecture by using the message broker as the source of truth.

Ensures eventual consistency between the published events and the database state.

Can be easier to implement than some other patterns.

Cons:

There's a potential for temporary inconsistency between the event being published and the database being updated.

It may introduce additional latency due to the round-trip through the message broker.

It can be more difficult to debug, as the flow of data is less straightforward.

Conclusion

We have looked at several patterns that can be used to solve the dual-write problem. The right approach depends on the use case, the specific requirements, and any constraints of the system being designed or modified. To wrap things up, the comparison below might help you in making your decision:

Data Consistency:

Event Sourcing: High consistency, events are the source of truth

Transactional Outbox: Strong consistency between database and outbox; messages reach the broker shortly afterwards, possibly more than once

Listen to Yourself: Eventual consistency, with potential for brief inconsistencies

CDC: Eventual consistency, with potential for small delays

Complexity of Implementation:

Event Sourcing: High - requires significant architectural changes

Transactional Outbox: Moderate - requires additional table and message relay process

Listen to Yourself: Low to Moderate - requires event publishing and self-subscription

CDC: Moderate - requires CDC tool setup and configuration

Performance Impact:

Event Sourcing: Can be high for read operations, depending on implementation

Transactional Outbox: Low impact on write operations, additional load for message relay

Listen to Yourself: Moderate - additional network round-trip for each operation

CDC: Low to Moderate - some overhead on database for log reading

Scalability:

Event Sourcing: Highly scalable, especially for write operations

Transactional Outbox: Scalable, with separate scaling for application and message relay

Listen to Yourself: Scalable, but may require careful management of self-subscriptions

CDC: Highly scalable, especially for high-volume changes

Auditability:

Event Sourcing: Excellent - full history of all changes

Transactional Outbox: Good - outbox can serve as an audit log

Listen to Yourself: Good - events can serve as an audit log

CDC: Excellent - captures all database changes

Ability to Reconstruct Past States:

Event Sourcing: Excellent - core feature of the pattern

Transactional Outbox: Limited - not a primary feature

Listen to Yourself: Possible but not straightforward

CDC: Possible if all changes are retained, but not a primary feature

Impact on Existing Systems:

Event Sourcing: High - requires fundamental architectural changes

Transactional Outbox: Moderate - requires changes to data access layer

Listen to Yourself: Moderate - requires changes to service logic

CDC: Low - can often be implemented without changing existing applications

Handling of Schema Changes:

Event Sourcing: Challenging - requires careful event versioning

Transactional Outbox: Moderate - affects outbox table and consumers

Listen to Yourself: Moderate - affects event structure and consumers

CDC: Challenging - changes in source database schema need to be carefully managed

Real-time Data Integration:

Event Sourcing: Excellent - events can be processed in real-time

Transactional Outbox: Good - near real-time, depending on relay frequency

Listen to Yourself: Excellent - events are processed immediately

CDC: Very Good - near real-time, with minimal delay

Resilience to System Failures:

Event Sourcing: High - can rebuild state from event log

Transactional Outbox: Good - messages persist in outbox until processed

Listen to Yourself: Moderate - depends on message broker reliability

CDC: Good - can resume from last processed transaction log position

Latency:

Event Sourcing: Can be high for complex queries on current state

Transactional Outbox: Low for writes, potential delay in message processing

Listen to Yourself: Moderate - additional network round-trip

CDC: Low to Moderate - depends on CDC tool and configuration